In this month’s issue:

- Interview with Arie Wilder, COO auxmoney

- Financial Industry AI Highlights

- AI Model Landscape: 2025’s Fast-Moving Frontier

- What we are reading at Sagard

Interview with Arie Wilder, COO auxmoney

For this month’s newsletter, we interviewed Arie Wilder, auxmoney’s COO, on effectively embedding AI in financial services. Our conversation covered how auxmoney selects AI projects based on clear KPIs, empowers cross-functional teams to build practical AI tools, and rapidly ships impactful solutions both internally and externally.

Here are our key takeaways from the interview:

- Auxmoney only invests in AI when there is a clear business case and a specific KPI, ensuring that every project delivers measurable value and is not just driven by technology trends. If a simpler solution works just as well, it takes priority.

- AI development is not limited to the tech team; every department is empowered to build their own AI tools using a shared internal platform, with guidance and support from a central team. This approach has led to broad adoption and meaningful impact across the organization.

- A foundation of standardized, high-quality data enables auxmoney to update its credit-risk models far more frequently than competitors and automate significant internal workflows. The company’s investment in clean, accessible data has unlocked major gains in speed and efficiency.

- New AI solutions are always introduced in careful stages, starting in a sandbox environment, then moving to shadow mode, and only reaching full production once they have been tested and refined with human oversight. By building feedback directly into daily workflows, auxmoney ensures that each deployment improves quickly and safely, while minimizing risk.

Let’s dive in.

For people who are not familiar with auxmoney, tell us about the company and your role there. How big is the team, and what roles and skill-sets matter most for AI-driven product development today?

Sure, auxmoney is the leading digital lending platform for consumer credit in Europe. What makes us special are our unique risk models and fully digital processes, which improve access to affordable credit while lowering the risk and cost of lending. I am the Chief Operating Officer and have been with the company pretty much since day one, and I also oversee everything we do in AI.

We are about 300 people, and about half work in data science, engineering, or other analytical roles. AI is not a central ivory-tower function – we embed it across departments. Because of that, the key skillset is not purely technical or business oriented; it is a hybrid: someone who understands the business domain and also the technical and analytical side. Curiosity, a growth mindset, and the willingness to challenge the status quo matter as much as pure technical chops.

In one of our previous conversations, you told me you only use AI where it creates clear value, not for hype. How do you green-light AI projects at auxmoney?

I strongly believe in focus and simplicity. Before we kick off any “big AI project” we ask a clear question: does AI really deliver a better solution than a simpler approach? Take customer communication: It is tempting to jump straight to a fancy language model, but sometimes the real win is improving self-service so the customer does not need to contact us in the first place. If the problem can be solved with a simple solution like a small process redesign, we do that and move on.

On the other hand, analyzing and processing unstructured data can generate unprecedented value in your risk models, product innovation and other areas.

When we do pursue AI initiatives, there must be a concrete KPI – like cost, revenue, risk, speed, or NPS – and a testable hypothesis that AI will move it. Also, we want to be able to get to a prototype quickly, validate the impact, and decide to scale or kill it.

In general, we strongly believe in focus. It is better to nail one thing than juggle ten half-baked experiments that never land.

What AI trends are you seeing in the market, and where can it deliver the most value in fintech?

Some opportunities are universal – automation, operational excellence, and faster innovation cycles. In lending I see two areas with big potential:

- Risk, underwriting, and fraud prevention. Models that digest unstructured data like text, voice and other patterns in real time give you brand-new signals and insights. That lowers credit risk and enriches your models with additional, highly relevant data.

- Truly individual products and services. We can tailor not just the offer but the tone, channel, even the design to each borrower’s preferences and personal circumstances. Done well, those little “magical moments” compound into serious customer loyalty.

Building with AI often needs a different culture. You let departments build their own AI tools. How did you make that work?

We started by recognising two employee groups. Employees who are used to working with data quickly understand the opportunities and risks; they know how to experiment with data, think in probabilities, and build guardrails, so AI felt natural. Other teams, like Legal and People, needed something tangible. The breakthrough was an internal “AI operating system”. Think of it as a controlled sandbox with our internal knowledge, processes, and APIs wired in. Anyone can open a chat-like interface, build an AI assistant or workflow with prompts only, and never risk production data.

A small central AI team acted as on-the-ground ambassadors, supporting teams in building the first use cases. We paired that freedom with clear governance for AI initiatives: once an initiative is slated for production and has passed compliance review, we run it in shadow mode first, then in production. Today every department has developed at least one internal AI assistant, and enthusiasm is high because people solve their own pain points.

You update your credit-risk model 10-15x faster than your competitors and also automated a big part of the internal workload. What enables your team to move at this speed?

Three main pillars:

- Data excellence: Clean, well-documented, standardised data flows make experimentation and new feature development easy and quick.

- Modular architecture and an A/B mindset: Everything – risk, product, marketing – is built for rapid test-and-learn, so quick iterations feel normal.

- Empowered teams: Autonomy and responsibility within the teams with clear goals and guardrails, plus a positive error culture. If an experiment flops, we capture the lesson and move forward.

How do you measure success, and has any outcome surprised you?

AI is never an end in itself. We judge AI projects on business metrics first. For example, in customer service, that means automation rate, resolution time, and CSAT. Internally we also track adoption. One surprising outcome to me was how broad and quick AI adoption was internally across all departments. More than 100 internal AI assistants have been built and more than 60% of our employees use our internal AI tools on a daily basis.

Quality and risk mitigation: how do you stay safe while moving fast, and can you share a feedback-loop example?

We never jump straight from prototype to live traffic. The progression normally is sandbox → shadow mode → small, closed live A/B test → full roll-out. In shadow mode, for example, the AI drafts an e-mail or a verification-step decision, but a human still sends the final version, so we see exactly where the model shines or fails.
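As an illustration of the staged progression described here, the following is a minimal sketch and not auxmoney's actual implementation. The `promote` function, the agreement-rate gate, and the 0.95 threshold are hypothetical; the interview does not say which criteria are used to move between stages.

```python
from enum import Enum


class Stage(Enum):
    """Rollout stages in the order given in the interview."""
    SANDBOX = "sandbox"
    SHADOW = "shadow mode"
    AB_TEST = "small, closed live A/B test"
    FULL_ROLLOUT = "full roll-out"


ORDER = list(Stage)  # Enum iteration preserves definition order


def promote(stage: Stage, agreement_rate: float, threshold: float = 0.95) -> Stage:
    """Advance one stage only if the model agreed with humans often enough.

    `agreement_rate` and `threshold` are assumed gating criteria,
    chosen purely for illustration.
    """
    if stage is Stage.FULL_ROLLOUT or agreement_rate < threshold:
        return stage  # stay put: already live, or not yet good enough
    return ORDER[ORDER.index(stage) + 1]
```

The point of the design is that a deployment can only move one step at a time, so nothing skips from sandbox to live traffic.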

We pay close attention to compounding errors: in a multi-step process, even small inaccuracies at each step can add up and lead to significant mistakes overall. That’s why we always keep humans in the loop, especially when the stakes are high and mistakes would have major consequences.
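To make the compounding effect concrete, here is a small sketch. It assumes every step succeeds independently with the same accuracy, which is a simplification, and the 95% figure is illustrative rather than an auxmoney number.

```python
def end_to_end_accuracy(step_accuracy: float, steps: int) -> float:
    """Probability that every step of a multi-step process is correct,
    assuming steps fail independently with the same accuracy."""
    if not 0.0 <= step_accuracy <= 1.0:
        raise ValueError("step_accuracy must be between 0 and 1")
    if steps < 0:
        raise ValueError("steps must be non-negative")
    return step_accuracy ** steps


# Illustrative numbers only: a 95%-accurate step chained 1, 5, and 10 times.
for n in (1, 5, 10):
    print(f"{n:>2} steps: {end_to_end_accuracy(0.95, n):.1%}")
```

Under these assumptions, a chain of five such steps is correct end to end about 77% of the time, and a chain of ten only about 60% of the time.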

Feedback should not be a separate task but be built into the normal workflow. For instance, when a customer care agent overrides a model suggestion, we capture the correction and the reason automatically. We then feed those overrides back into our model to improve accuracy.
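One way such an in-workflow feedback loop could be structured is sketched below. The `OverrideLog` class and its field names are hypothetical, and the example decisions are invented; the interview only states that corrections and reasons are captured automatically and fed back into the model.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class OverrideLog:
    """Hypothetical log that records each model suggestion next to the
    decision the human agent actually made."""
    records: list = field(default_factory=list)

    def record(self, suggestion: str, final: str, reason: str = "") -> None:
        # An override is any case where the agent changed the suggestion.
        self.records.append({
            "suggestion": suggestion,
            "final": final,
            "overridden": suggestion != final,
            "reason": reason,
        })

    def override_rate(self) -> float:
        """Share of suggestions that agents changed (0.0 if nothing logged)."""
        if not self.records:
            return 0.0
        return sum(r["overridden"] for r in self.records) / len(self.records)

    def top_reasons(self, n: int = 3) -> list:
        """Most common override reasons, useful for spotting weak spots."""
        reasons = Counter(r["reason"] for r in self.records if r["overridden"])
        return reasons.most_common(n)

    def training_examples(self) -> list:
        """(model output, human-approved output) pairs for retraining."""
        return [(r["suggestion"], r["final"])
                for r in self.records if r["overridden"]]


log = OverrideLog()
log.record("approve", "approve")
log.record("approve", "escalate", reason="income document unreadable")
```

Because the record is written at the moment the agent acts, no separate feedback form is needed; the override rate and the list of reasons fall out of normal work.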

What is next on auxmoney’s AI roadmap? Any bold bets this year?

Beyond the areas I mentioned and the vast value we can generate from them, I believe there is big potential in AI-powered, individualized product offerings with real-time service, tailored to each customer’s preferences.

Any parting advice for companies just starting their AI journey?

Get something live – even an internal tool – as fast as possible. This breaks the “AI is scary” mental barrier. Make a business owner, not the data team, accountable for the relevant KPIs. Invest in data quality earlier than feels comfortable, and revisit ideas you once shelved as “too hard.” With today’s tooling they might be a “weekend build” instead of a year-long project.

Financial Industry AI Highlights

Customer experience is more important than ever!

Customer experience has become a fundamental business differentiator, with 80% of customers saying it is as important as the products or services themselves. Leading fintechs are moving beyond basic chatbots and deploying AI that can handle complex financial queries, process transactions, and offer personalized advice.

Some companies are building proprietary AI solutions in-house, leveraging their deep understanding of specific customer needs and regulatory requirements. This approach gives them complete customization and control, but it also requires substantial investment in AI talent and infrastructure. Other companies are partnering with specialized platforms such as Sierra, Decagon, and Lorikeet to deliver sophisticated AI support more quickly. While these solutions offer faster time to market and proven effectiveness, they provide less flexibility for customization.

A recent BCG survey of over 280 finance executives found that most finance teams are struggling to achieve meaningful ROI from AI, with a median ROI of just 10 percent. The problem isn’t lack of effort or interest; it’s that teams are prioritizing learning over value realization, running disconnected pilots instead of integrated transformations, and chasing low-impact efficiency plays in transactional areas rather than the high-ROI use cases that actually move the needle.

The Winning Playbook: Focus, Collaborate, Execute: The top 20% of performers who achieve 20%+ ROI follow a different playbook entirely. They focus ruthlessly on quick wins over open-ended experimentation, embed AI into broader finance transformation strategies (boosting success rates by 7%), and actively collaborate with IT and vendors instead of building everything in-house. Most surprisingly, they prioritize risk management and advanced forecasting over the accounts payable automation that most teams chase.

AI Model Landscape: 2025’s Fast-Moving Frontier

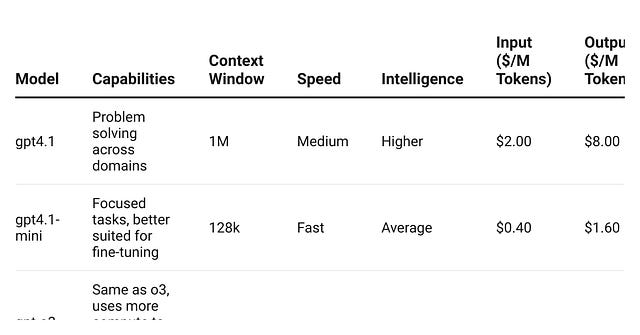

One year ago the default was “just call GPT-4.” Today every team is juggling at least three frontier families (most commonly OpenAI, Anthropic, Google), selecting per-task based on the moving trade-off curve of cost, latency and reasoning depth.

Frontier Models

These are just the flagship models by OpenAI, Google, and Anthropic. If you head over to llm-stats.com, you can see a full list of 100+ proprietary and open source models and how they perform across industry benchmarks. So what model should you pick? We wish there were an easy answer, but the right model really depends on a few factors:

- Complexity: how complex is the task? More complex jobs require more intelligent reasoning models.

- Context window: how much context do you need to provide to get the desired output?

- Speed: users expect fast responses while interacting with chat interfaces. But if you are automating pipelines that can run in the background, then slower models might work for you.

- Cost: early in your development process, we recommend you at least test with the best model available, but at scale these costs can add up quickly. At this point, it’s in your best interest to optimize your prompt to get the most out of cheaper models.

- Vendor lock-in: Just because you are with AWS (Claude models are hosted here) doesn’t mean you shouldn’t try out OpenAI or Gemini models. Find the best model for your task and then evaluate the level of effort required to onboard Azure (for OpenAI models) and GCP (Gemini) to access those models. There are some interesting tools like LangDock and OpenRouter that allow you to evaluate all the models from a single interface.
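The first four factors can be turned into a simple selection rule, sketched below under stated assumptions: filter out models that miss your quality bar, context need, or latency budget, then take the cheapest survivor. The `ModelOption` fields and `pick_model` function are hypothetical, and the candidate names and numbers are placeholders rather than real benchmark or pricing data. Vendor lock-in is left out because it is an onboarding-effort question, not a per-request metric.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ModelOption:
    name: str
    quality: float            # score on your own eval set, 0..1
    context_tokens: int       # maximum context window
    latency_s: float          # typical response time in seconds
    cost_per_1k_calls: float  # in your currency of choice


def pick_model(options, min_quality: float, needed_context: int,
               max_latency_s: float) -> Optional[ModelOption]:
    """Return the cheapest model meeting all three constraints, or None."""
    eligible = [
        m for m in options
        if m.quality >= min_quality
        and m.context_tokens >= needed_context
        and m.latency_s <= max_latency_s
    ]
    return min(eligible, key=lambda m: m.cost_per_1k_calls, default=None)


# Placeholder candidates; replace with measurements from your own tests.
candidates = [
    ModelOption("large-reasoning", 0.92, 200_000, 12.0, 40.0),
    ModelOption("mid-tier", 0.85, 128_000, 3.0, 8.0),
    ModelOption("small-fast", 0.70, 32_000, 0.8, 1.0),
]

chat_choice = pick_model(candidates, min_quality=0.80,
                         needed_context=50_000, max_latency_s=5.0)
batch_choice = pick_model(candidates, min_quality=0.90,
                          needed_context=150_000, max_latency_s=60.0)
```

With these placeholder numbers, the chat use case lands on the mid-tier model, while the background pipeline can afford the slower, stronger one.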

What we are reading at Sagard

- AI Trends: This is the most comprehensive report on AI Trends by Mary Meeker, known as “Queen of the Internet”. Here are my top 3 takeaways from this 340-page report:

- Cost to serve AI models down 99.7% over past 2 years.

- Time to 100MM users: ChatGPT (2 months), TikTok (9 months), Instagram (2.5 years). This is mind-blowing; the speed of adoption is unprecedented.

- Enterprise AI Revenue growth has been incredible. Harvey (LegalTech) grew from 10MM to 70MM ARR in 15 months, Cursor (AI Code Editor) grew from 1MM to 300MM ARR in 5 months. As always, there is an appetite to pay for products that solve real problems and AI helps you solve those problems at scale.

- In January, Goldman Sachs rolled out their in-house AI Assistant to 10,000 employees as a pilot. Five months later, it’s being deployed firmwide to 45,000+ employees. The Assistant can now help summarize documents, draft content, analyze data, and translate research into client-preferred languages, a clear signal that AI copilots are moving from experimentation to everyday utility.

- AlphaEvolve: a coding agent powered by Gemini that actually evolves better algorithms over time. It starts with a basic implementation, generates a bunch of code variants using LLMs, tests how well they perform, and then refines the best ones in a loop, kind of like natural selection, but for code.

- How to Make AI Your Thinking Partner: Great insights on transforming AI from a mere tool into a true thinking partner. This article challenges the conventional focus on prompt engineering, emphasizing instead the importance of cultivating a collaborative mindset with AI. By treating AI as a co-creator rather than just a responder, users can unlock deeper creativity and more meaningful interactions.