In this month’s issue:

- Interview with Ken Lotocki, CPO of Conquest Planning

- ChatGPT Pulse: The Beginning of Proactive Agents

- The AI-Powered Automation Revolution: Why 2025 is the Tipping Point

- What we are reading at Sagard

Interview with Ken Lotocki, Chief Product Officer of Conquest Planning

For this month’s newsletter, we spoke with Ken, CPO of Conquest Planning, about how AI is reshaping the delivery of financial advice and what lessons extend more broadly. Our conversation highlighted the importance of trust, the challenge of context, and why the future of AI may be driven by agents rather than standalone models.

- AI should clear the clutter. In knowledge-heavy fields, professionals spend too much time on mundane data collection and calculations. AI can handle that heavy lifting and free humans to focus on relationships, coaching, and execution.

- Auditability matters more than flash. Black-box systems that hallucinate are a non-starter where compliance and trust are required. Expert systems that produce consistent and explainable recommendations are far more valuable.

- Context is the hardest challenge. Teaching models to speak the language of a domain takes repeated training and feedback. Success means the AI understands workflows and terminology the way practitioners do, not just dictionary definitions.

- Agents are the future. The next wave will be intelligent multi-agent systems that operate on approved data sources. This reduces hallucinations, ensures traceability, and enables safe execution of real tasks with the right permissions and authentication.

Let’s dive in.

For readers who may be new to Conquest Planning, can you give us a high-level picture? What exactly is the company building, and how would you summarise your AI thesis in a sentence or two?

Ken: Conquest Planning is about democratising access to financial advice. We are a scalable advice platform that serves every segment, from the mass market and mass affluent to high net worth and ultra high net worth clients. Conquest supports end-clients and/or financial professionals in constructing financial plans more efficiently and effectively. We operate B2B2C and B2B through banks, and we are live in Canada, the UK, and the US.

At the core is SAM, our Strategic Advice Manager. SAM is an expert system with a library of codified strategies. It analyses client goals, preferences, risk tolerance, cash flow, and taxes, then generates context-specific recommendations. Unlike probabilistic AI, SAM is auditable and consistent. It cannot hallucinate, which makes it safe for Advisors and AI agents to use.

Before you started building SAM, what specific gap or problem did you see in the way financial advice was delivered? What was broken that you wanted to fix?

Ken: Financial planning involves too much mundane work. Advisors spend significant time gathering data, entering it into systems, and crunching numbers instead of connecting with clients. AI can handle the heavy lifting: collecting and organising information, spotting patterns, and even matching people to the right products within regulatory frameworks.

That frees Advisors to become more like behavioural life coaches, focusing on client relationships, execution, and coaching, while models handle repetitive calculations and data manipulation.

Let’s get into the solution. What exactly did you and the team build in order to solve that problem?

Ken: SAM is the core differentiator. It was deliberately designed as an expert system for auditability, compliance, and model risk review. SAM draws from plan sentiment, client data, cash flow, and taxes, then creates a prioritised list of strategies specific to each client.

If two clients are true financial doppelgängers, they will receive the same list. I think of SAM as the answer key at the back of the math textbook, or as a caddie to the golfer. Firms using SAM have seen significant gains in both speed and efficiency when building plans.

SAM is also proactive. As client data changes, or when a new regulation, tax rule, or account type emerges, firms can test in near real time which clients are affected and automatically generate an updated list of strategies.
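SAM's internals are not public, so the following is only an illustration of the general idea behind a codified, deterministic expert system, not Conquest's implementation. It assumes each strategy is a plain predicate over a client profile with a fixed priority. The `Client` fields, strategy names, and rules are all hypothetical. Because the rules are pure functions and ties are broken deterministically, identical profiles always get identical lists, and a newly codified rule can be run across a whole book of clients to see who it affects.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class Client:
    # Hypothetical profile fields, for illustration only
    client_id: str
    age: int
    annual_income: float
    has_workplace_pension: bool
    unused_contribution_room: float


@dataclass(frozen=True)
class Strategy:
    name: str
    priority: int  # lower number ranks higher
    applies: Callable[[Client], bool]


# Hypothetical codified strategies; a real library would be far larger
STRATEGIES = [
    Strategy("Use unused tax-advantaged contribution room", 1,
             lambda c: c.unused_contribution_room > 0 and c.annual_income > 50_000),
    Strategy("Join workplace pension plan", 2, lambda c: not c.has_workplace_pension),
    Strategy("Review retirement income drawdown", 3, lambda c: c.age >= 55),
]


def recommend(client: Client, strategies: List[Strategy]) -> List[str]:
    """Prioritised list of applicable strategies; same inputs always give the same output."""
    hits = [s for s in strategies if s.applies(client)]
    # Sort by priority, then name, so the order never depends on anything but the rules
    return [s.name for s in sorted(hits, key=lambda s: (s.priority, s.name))]


def affected_clients(clients: List[Client], new_strategy: Strategy) -> List[str]:
    """Impact analysis: which clients would a newly codified rule apply to?"""
    return [c.client_id for c in clients if new_strategy.applies(c)]


if __name__ == "__main__":
    a = Client("A", 60, 80_000, True, 5_000)
    twin = Client("A2", 60, 80_000, True, 5_000)  # a financial doppelganger of A
    young = Client("B", 30, 40_000, False, 0)
    assert recommend(a, STRATEGIES) == recommend(twin, STRATEGIES)
    print(recommend(a, STRATEGIES))
    # ['Use unused tax-advantaged contribution room', 'Review retirement income drawdown']
    new_rule = Strategy("Consider newly introduced account type", 4, lambda c: c.age < 40)
    print(affected_clients([a, twin, young], new_rule))  # ['B']
```

Keeping rules as explicit data rather than model weights is what makes this style of system reviewable: each recommendation traces back to the exact rule that fired.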

From the perspective of an Advisor or end-client, how does this change the day-to-day experience? And practically, how quickly do plans adapt when life events or market shifts occur?

Ken: Whether it is a human Advisor, an AI agent, or a client directly interacting with their plan, SAM continuously updates recommendations as circumstances change. Because the strategies are codified, they remain auditable and reviewable.

On top of that, LLMs can make financial literacy more accessible. They can explain complex concepts such as stochastic analysis, annuities, or home equity.

LLMs can enrich advice further by adding relevant details, such as the hidden costs tied to a planned vacation.

My advice to Advisors is simple: embrace the benefits. Ask yourself what part of the job you dislike most, because that is usually what AI can take off your plate.

Beyond SAM itself, are you developing the other AI capabilities in-house, or are you more focused on buying and integrating existing solutions?

Ken: Beyond SAM, we are firmly on the buy side of build versus buy. Our strategy is to integrate best-in-class tools rather than build them ourselves. For example, we are a Salesforce shop for sales and support, and we are exploring Agentforce for first-line support since we see a lot of repetitive questions. We are also evaluating federated search because we have a large amount of support data, change logs, and account management notes. An online model could quickly answer things like “Why did the Advisor do this?” or “How many open tickets are there?”

For research, we rely on external tools to provide baseline context on complicated financial concepts like estate trusts, taxes or investment products. We also use existing tools for content generation. And on the engineering side, our developers are testing coding assistants like Cursor, Amazon Q Developer, and Claude Code to help speed up software development.

Given how sensitive financial data is, how are you making sure these systems are safe, compliant, and acceptable to your bank partners, especially as Ask SAM rolls out?

Ken: Security and compliance come first. Ask SAM will only pull from internal sources like Confluence, Jira, and our knowledge base. We will never rely on the open internet for calculations, and we host the models on the same servers as our app and calculation engine. To further protect client data, we use obfuscation so that personally identifiable information is never exposed. In most cases, high-level demographics, or even province/state/country, are enough when external context is required.

On the model side, we are not building our own LLM. Instead, we test different options such as Google’s Gemini, Anthropic’s Claude, and OpenAI’s GPT, and select the best model for the task. This model-agnostic approach means we can adapt if partners or regulators require a specific configuration. Large banks, for example, may prefer to run their own trained LLMs with strict guardrails, and Ask SAM is designed to work in that environment.
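Ken does not detail how Conquest obfuscates client data. One common pattern, sketched below under that assumption, is to pass only an allowlist of low-risk fields, coarsen quasi-identifiers such as age into bands, and replace the internal client ID with a salted hash so that responses can be re-joined internally. The field names, ten-year bands, and the `PASSTHROUGH` set are all hypothetical.

```python
import hashlib
from typing import Any, Dict

# Hypothetical allowlist of fields that may leave the environment unchanged
PASSTHROUGH = {"province", "state", "country", "goal", "risk_tolerance"}


def age_band(age: int) -> str:
    """Coarsen an exact age into a ten-year band, e.g. 47 -> '40-49'."""
    low = (age // 10) * 10
    return f"{low}-{low + 9}"


def obfuscate(record: Dict[str, Any], salt: str) -> Dict[str, Any]:
    """Build a reduced copy of a client record for use as external context."""
    out: Dict[str, Any] = {}
    for key, value in record.items():
        if key == "age":
            out["age_band"] = age_band(value)
        elif key == "client_id":
            # Salted hash: a stable pseudonym that only the salt holder can re-link
            out["client_ref"] = hashlib.sha256(f"{salt}:{value}".encode()).hexdigest()[:12]
        elif key in PASSTHROUGH:
            out[key] = value
        # Anything else (name, email, account numbers, ...) is dropped by default
    return out
```

An allowlist fails safe: a new field added to the client record stays internal until someone explicitly approves it. A salted hash is pseudonymization rather than anonymization, so the salt has to stay inside the environment.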

Every startup hits a wall somewhere. For Conquest, what has been the hardest part so far in bringing SAM and the AI stack to life?

Ken: The hardest challenge has been context: teaching models to “speak Conquest.” For example, in Conquest you create a retirement goal, then go to planning and solve it, but a user might simply say “I want to stop working at 65.” That difference sounds small, but it matters for how the model interprets instructions.

Take education planning as an example. An Advisor should not have to key in tuition costs. They should be able to ask for the average McGill tuition for four years on campus, and have that value written directly into our JSON for the education model.

This requires training and feedback. During user acceptance testing, someone might say “add my salary” and the model will not respond. We then teach it to recognise that “salary” and “income” are the same. It is a process of refining language and behaviour until the model naturally understands the way Advisors think and work.
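In Conquest's case the mapping is refined through training and feedback during user acceptance testing. The lookup table below is only a hypothetical, much-simplified stand-in that shows the end state Ken describes: different wordings such as “salary” and “income” resolve to one canonical field, and the value is written into the plan JSON. The alias table, field names, and flat JSON layout are assumptions, not Conquest's schema.

```python
import json
from typing import Any, Optional

# Hypothetical alias table: everyday phrasings mapped to one canonical plan field
FIELD_ALIASES = {
    "salary": "income",
    "income": "income",
    "wages": "income",
    "tuition": "education_cost",
    "stop working": "retirement_goal",
    "retire": "retirement_goal",
}


def canonical_field(utterance: str) -> Optional[str]:
    """Map a user's wording to a canonical field name, or None if unrecognised."""
    text = utterance.lower()
    # Try longer aliases first so multi-word phrases win over shorter substrings
    for alias in sorted(FIELD_ALIASES, key=len, reverse=True):
        if alias in text:
            return FIELD_ALIASES[alias]
    return None


def write_to_plan(plan_json: str, utterance: str, value: Any) -> str:
    """Write a value into the plan JSON under the canonical field for the utterance."""
    field = canonical_field(utterance)
    if field is None:
        raise ValueError(f"Unrecognised field in request: {utterance!r}")
    plan = json.loads(plan_json)
    plan[field] = value
    return json.dumps(plan, sort_keys=True)


if __name__ == "__main__":
    print(write_to_plan('{"income": 0}', "Add my salary", 95000))  # {"income": 95000}
```

Raising an error on unrecognised wording, rather than guessing, is what surfaces the gaps that feedback rounds then close.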

Looking ahead to the near term, what are the key roadmap items or milestones we should expect from Conquest?

Ken: Ask SAM is coming soon. On data migration, we are adding an integration layer that can take unstructured data, including competitor client reports, load it into an LLM, write it to our JSON, and map fields between systems. With human review, this will meaningfully change how migrations are handled.

The same approach could also support open banking alternatives. Instead of screen scraping, a client could grant access to their bank PDFs and documents, and SAM could build a plan directly from that unstructured data.

On the output side, LLMs open up new ways to present plans. That includes personalised explanations such as “teach it to me like I am five,” “explain what my Advisor is doing,” or even AI avatars that deliver insights when the client is ready.

Finally, stepping back from Conquest specifically, which broader AI trends are you watching most closely as it relates to financial services?

Ken: MCP (Model Context Protocol) and agents are where the real shift is happening. What is new is intelligent multi-agent systems that operate on approved data sources, which reduces hallucinations. With generic LLMs, you often do not know where the answer came from. I have seen outputs cite Wikipedia or Reddit, and that is not the reliability financial services needs.

Agents that have an approved MCP connection build confidence because they use trusted sources, and they also create a role for people to keep those sources current. Someone still has to write and update the knowledge base so the model can deliver accurate answers in plain language. I have been digging into MCP, and it is still early but promising.

My practical vision is simple. As a banking client, I want to reduce the time spent on simple or mundane financial tasks. If the agent has the right permissions and authentication, I should be able to say “send 100 dollars for a fantasy football draft,” and it will do so safely because it recognizes the approved recipient. The time saved could then be spent on the more complicated questions I may need my financial institution or my financial advisor to answer, like “Can I retire?” That is the direction I expect the industry to go.
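The guardrails Ken describes (permissions, authentication, and an approved recipient) can be expressed as a few explicit checks that run before an agent is allowed to move money. The sketch below is hypothetical: the `AgentGrant` structure, the `payments:send` scope name, and the per-payment limit are illustrative assumptions, not any bank's or protocol's API.

```python
from dataclasses import dataclass
from typing import Set, Tuple


@dataclass
class AgentGrant:
    """Hypothetical permissions a client delegates to a banking agent."""
    scopes: Set[str]
    approved_recipients: Set[str]
    per_payment_limit: float


@dataclass
class PaymentRequest:
    recipient: str
    amount: float
    client_authenticated: bool  # e.g. the client passed step-up authentication


def check_payment(grant: AgentGrant, req: PaymentRequest) -> Tuple[bool, str]:
    """Allow a payment only if every guardrail passes; return (allowed, reason)."""
    if not req.client_authenticated:
        return False, "client not authenticated"
    if "payments:send" not in grant.scopes:
        return False, "agent lacks payment permission"
    if req.recipient not in grant.approved_recipients:
        return False, "recipient not on approved list"
    if not 0 < req.amount <= grant.per_payment_limit:
        return False, "amount outside permitted range"
    return True, "ok"
```

Each check fails closed, so a request the agent cannot justify is refused rather than guessed at, and the returned reason gives an audit trail for every decision.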

ChatGPT Pulse: The Beginning of Proactive Agents

Last week, OpenAI announced ChatGPT Pulse for Pro users, its first proactive agent that brings updates to you instead of waiting for your prompt. Pulse makes recommendations, follows up on conversations, and curates content from your calendar and email, all inside ChatGPT.

The key line from the announcement:

Each night, it synthesizes information from your memory, chat history, and direct feedback to learn what’s most relevant to you, then delivers personalized, focused updates the next day.

Zoom out, and this points to a bigger shift: AI that anticipates needs across data and delivers synthesis at the right level. Pulse today relies on your ChatGPT history. In the enterprise, the equivalent is meeting notes, call transcripts, and Slack or Teams threads. These conversational signals show what matters now and what is likely to matter next.

But conversations alone are not enough. To be truly proactive, an agent also has to merge that running context with structured systems like CRM, finance, and ERP, along with unstructured sources like docs, code, and tickets. Today those sit in silos, which is why we still fall back on dashboards and manual queries. Once unified, the agent can connect the dots, surface what matters next, and move work forward. That is when agents stop being assistants you query and start becoming proactive collaborators.

The AI-Powered Automation Revolution: Why 2025 is the Tipping Point

Automation Spending Surges

A new industry report from Redwood Software reveals a powerful transformation: 73% of companies increased their automation spending in the past year, with nearly 40% reporting cost reductions of 25% or more. The message is clear: automation has evolved from nice-to-have to business-critical.

The data tells the story: 70% of businesses now view automation as central to competitiveness, while 65% expect AI to dramatically enhance their automation capabilities. For firms in finance and private investment like Sagard, this signals we’re entering an era where intelligent automation isn’t just an advantage; it’s table stakes.

Source: Redwood Software “Enterprise Automation Index 2025” (conducted independently with Leger Opinion, surveying 285 industry practitioners)

The Intelligence Upgrade

While automation has existed for decades, recent AI advances have fundamentally transformed what’s possible:

1. Conquering Unstructured Data

Previously, automating processes involving unstructured inputs, like personalizing emails or interpreting varied user formats, was complex or impossible. Today’s LLMs can ingest messy, unstructured data and output clean, structured formats (like JSON) that seamlessly feed into automation pipelines.
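A practical corollary is that model output should be validated before it reaches the next automation step. The sketch below assumes the model has been asked to reply with a JSON object; the `SCHEMA`, the allowed `request_type` values, and the 1 to 5 urgency scale are hypothetical. It extracts the outermost object (models sometimes wrap JSON in prose), rejects missing or mistyped fields, and passes on only known keys.

```python
import json

# Hypothetical target schema for one pipeline step: field name -> required type
SCHEMA = {"company": str, "contact_email": str, "request_type": str, "urgency": int}
ALLOWED_REQUEST_TYPES = {"meeting", "information", "support", "other"}


def parse_llm_json(raw: str) -> dict:
    """Extract, parse, and validate a JSON object from a model reply."""
    # Keep only the outermost {...} in case the reply includes surrounding text
    start, end = raw.find("{"), raw.rfind("}")
    if start == -1 or end < start:
        raise ValueError("no JSON object found")
    data = json.loads(raw[start:end + 1])  # JSONDecodeError subclasses ValueError
    for key, expected in SCHEMA.items():
        if key not in data:
            raise ValueError(f"missing field: {key}")
        if type(data[key]) is not expected:  # strict, so True is not accepted as an int
            raise ValueError(f"wrong type for {key}")
    if data["request_type"] not in ALLOWED_REQUEST_TYPES:
        raise ValueError(f"unexpected request_type: {data['request_type']}")
    if not 1 <= data["urgency"] <= 5:
        raise ValueError("urgency must be between 1 and 5")
    # Return only known fields so stray keys never reach downstream steps
    return {key: data[key] for key in SCHEMA}
```

Rejecting bad output with a clear error lets the pipeline retry the model or route the item to a person instead of silently writing malformed data downstream.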

2. Intelligent Error Reduction

AI doesn’t just execute tasks; it adds intelligence throughout the process. Modern automation can classify data, add contextual metadata, and catch edge cases that would traditionally cause failures, dramatically improving pipeline reliability and output quality.

3. Agentic Workflows

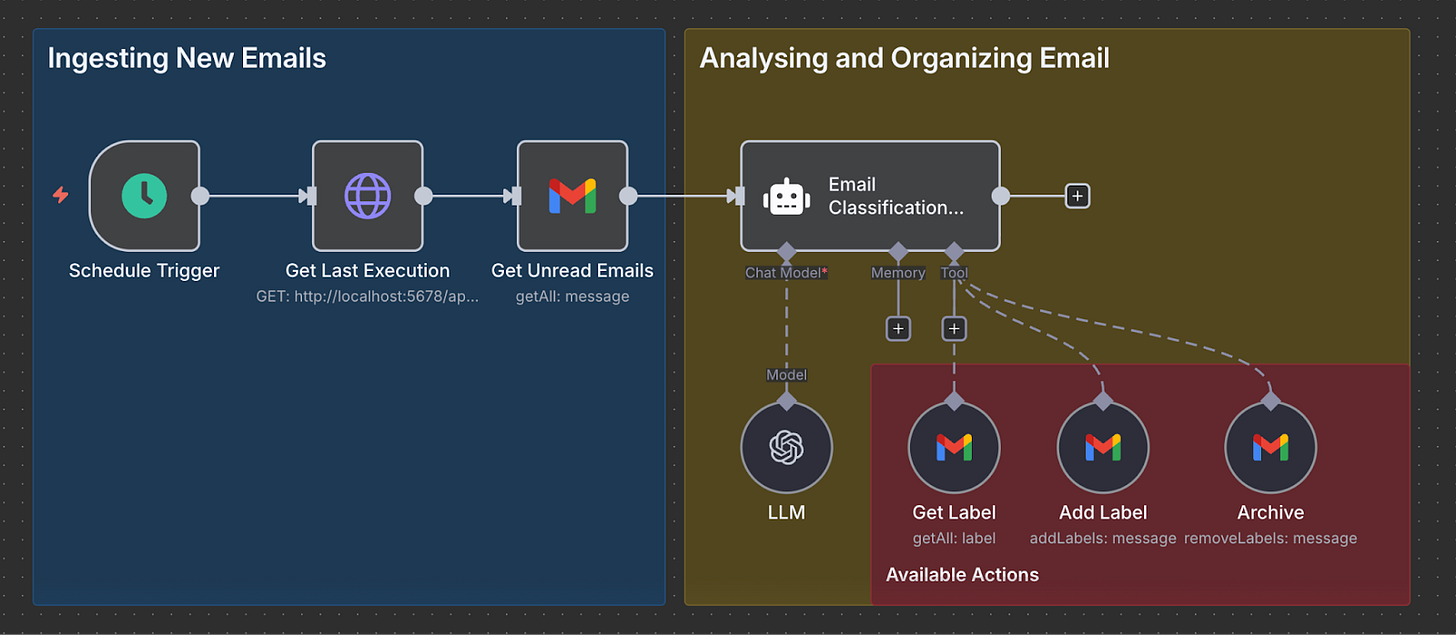

Platforms like n8n now offer sophisticated agentic capabilities. An automation can monitor your inbox, intelligently classify emails, apply appropriate labels, and autonomously decide whether to archive or escalate, delivering a cleaner, more manageable workflow without human intervention.

4. Democratized Development

AI has collapsed the technical barriers to automation. What once required hours of documentation reading and debugging can now be solved with simple AI-assisted development. This opens automation creation to non-technical team members, accelerating company-wide adoption.

Automation in Action at Sagard

We’re putting these principles into practice with three core workflows:

🔍 Intelligent Email Classification

Automatically categorizes and routes incoming emails based on content analysis, potentially reducing manual sorting by up to 80%, based on preliminary internal assessments.

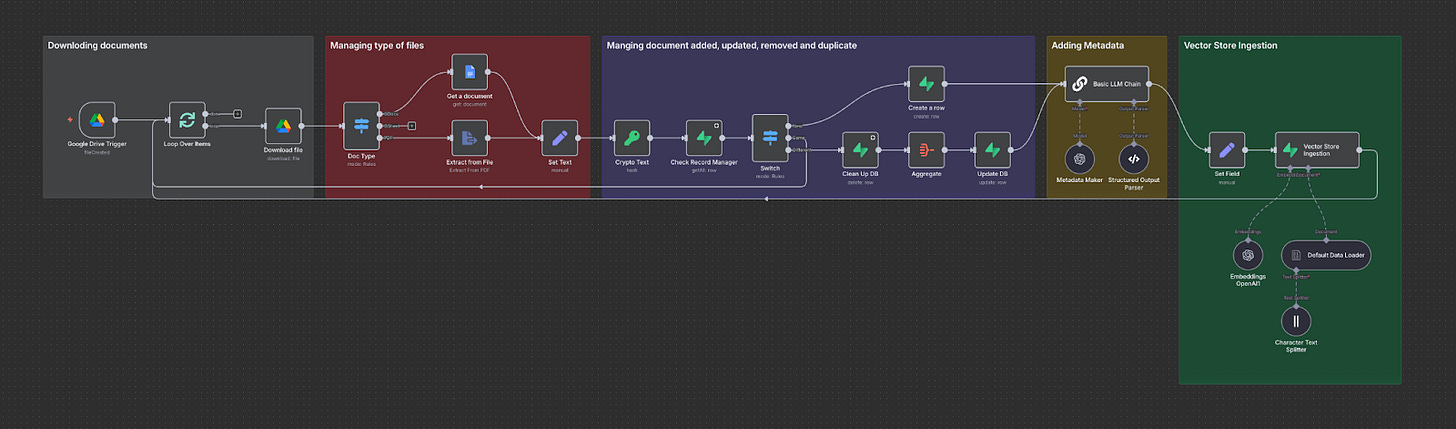
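The classify-then-route pattern behind this workflow can be sketched in a few lines. This is an illustrative sketch rather than the workflow itself. To keep it self-contained, a keyword scorer stands in for the LLM or trained classifier a real deployment would more likely use, and the labels, keywords, and 0.6 confidence threshold are hypothetical. Low-confidence or unrecognised emails are escalated to a person rather than filed automatically.

```python
from dataclasses import dataclass
from typing import Tuple

# Hypothetical labels and keywords; a real deployment would likely call a model here
KEYWORDS = {
    "deal_flow": ["term sheet", "pitch deck", "fundraise"],
    "compliance": ["kyc", "anti-money laundering", "audit", "regulator"],
    "newsletter": ["unsubscribe", "weekly digest", "webinar"],
}


@dataclass
class Decision:
    label: str
    action: str  # "route", "archive", or "escalate"


def classify(subject: str, body: str) -> Tuple[str, float]:
    """Return (label, confidence): the winning label's share of all keyword hits."""
    text = f"{subject} {body}".lower()
    scores = {label: sum(kw in text for kw in kws) for label, kws in KEYWORDS.items()}
    total = sum(scores.values())
    if total == 0:
        return "unknown", 0.0
    best = max(scores, key=scores.get)  # ties go to the first label listed
    return best, scores[best] / total


def decide(subject: str, body: str, min_confidence: float = 0.6) -> Decision:
    label, confidence = classify(subject, body)
    if label == "unknown" or confidence < min_confidence:
        return Decision(label, "escalate")  # hand ambiguous mail to a person
    if label == "newsletter":
        return Decision(label, "archive")
    return Decision(label, "route")  # e.g. apply the label and forward to the owning team
```

Raising `min_confidence` trades less automation for fewer misfiled emails, which is usually the right default while a workflow is still being validated.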

📚 Dynamic Knowledge Base (RAG Pipeline)

Monitors Google Drive folders and automatically updates our vector database, ensuring our AI knowledge base agent always has access to the latest information.

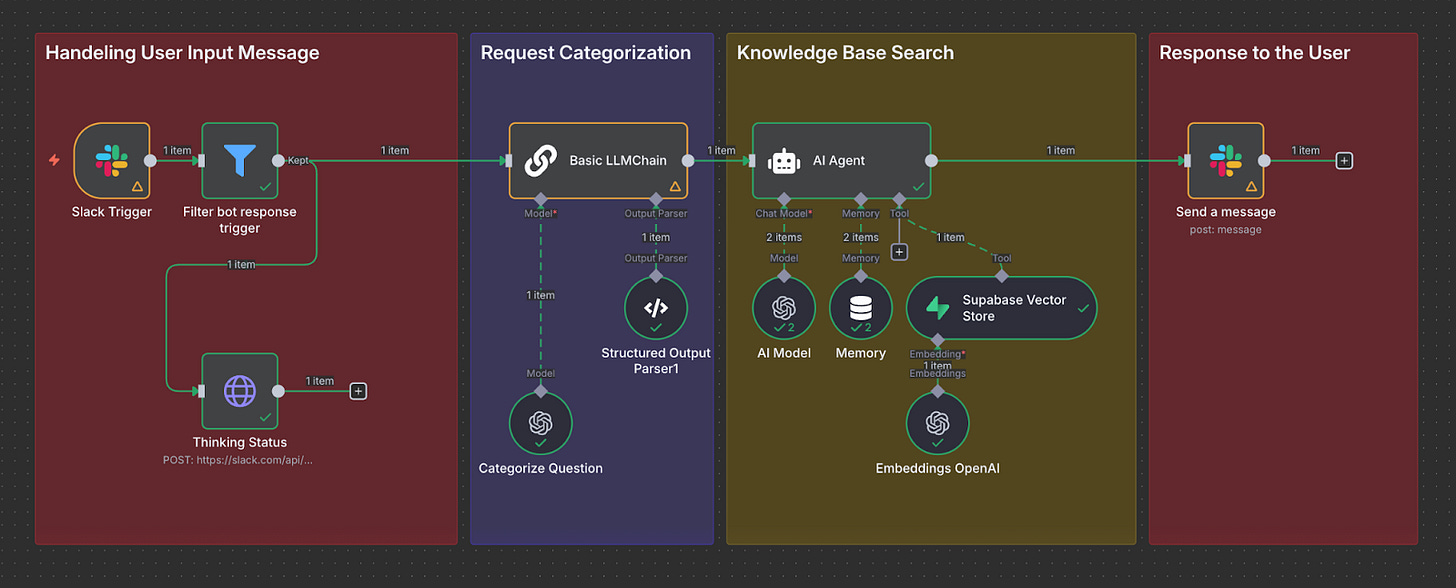
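The core of a self-updating knowledge base is incremental sync: re-embed only the files that changed, and remove vectors for files that were deleted. The sketch below assumes the folder has already been read into a `{file_id: text}` snapshot (it does not call the Google Drive API) and uses a toy hashed bag-of-words `embed` function as a placeholder for a real embedding model. `VectorIndex` is a hypothetical in-memory stand-in for a vector database.

```python
import hashlib
import math
from collections import Counter
from typing import Dict, List, Tuple

DIM = 64  # toy embedding size


def embed(text: str) -> List[float]:
    """Toy hashed bag-of-words embedding, normalised to unit length."""
    vec = [0.0] * DIM
    for word, count in Counter(text.lower().split()).items():
        vec[int(hashlib.md5(word.encode()).hexdigest(), 16) % DIM] += count
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


def chunk(text: str, size: int = 50) -> List[str]:
    """Split text into chunks of at most `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


class VectorIndex:
    def __init__(self) -> None:
        self.file_hashes: Dict[str, str] = {}
        # (file_id, chunk_no) -> (embedding, chunk text)
        self.chunks: Dict[Tuple[str, int], Tuple[List[float], str]] = {}

    def _drop(self, file_id: str) -> None:
        for key in [k for k in self.chunks if k[0] == file_id]:
            del self.chunks[key]

    def sync(self, snapshot: Dict[str, str]) -> Dict[str, List[str]]:
        """Reconcile the index with a folder snapshot {file_id: text}."""
        changes: Dict[str, List[str]] = {"added": [], "updated": [], "deleted": []}
        for file_id, text in snapshot.items():
            digest = hashlib.sha256(text.encode()).hexdigest()
            previous = self.file_hashes.get(file_id)
            if previous == digest:
                continue  # unchanged file: skip re-embedding
            self._drop(file_id)
            for n, piece in enumerate(chunk(text)):
                self.chunks[(file_id, n)] = (embed(piece), piece)
            self.file_hashes[file_id] = digest
            changes["added" if previous is None else "updated"].append(file_id)
        for file_id in [f for f in self.file_hashes if f not in snapshot]:
            self._drop(file_id)  # file was removed from the folder
            del self.file_hashes[file_id]
            changes["deleted"].append(file_id)
        return changes

    def search(self, query: str, k: int = 3) -> List[Tuple[str, str]]:
        """Return the k chunks with the highest cosine similarity as (file_id, text)."""
        q = embed(query)
        ranked = sorted(
            self.chunks.items(),
            key=lambda item: sum(a * b for a, b in zip(q, item[1][0])),
            reverse=True,
        )
        return [(key[0], text) for key, (_, text) in ranked[:k]]
```

Hashing file contents makes each sync cheap when little has changed, which matters once the knowledge base grows to thousands of documents.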

🤖 Integrated AI Assistant

Slack-integrated chatbot that instantly answers team questions about IT, compliance, and HR policies, reducing the load on our operations team.

The bottom line: we’re at an inflection point where AI-powered automation isn’t just changing how we work; it’s redefining what’s possible. We believe the organizations that embrace this shift today will set the pace for tomorrow.

What we are reading at Sagard

- Announcing Agent Payments Protocol (AP2) | Google Cloud Blog

Google rolled out AP2, an open protocol to let AI agents handle payments directly. The idea is to standardise how agents prove what a user wanted to buy, what was in the cart, and how payment was authorised, so there’s a clean trail for audits and disputes. Over 60 partners are backing it at launch. If you’re thinking about agent-driven checkout, AP2 takes care of the plumbing, but you’ll still need your own fraud, compliance, and KYC controls.

- Measuring the performance of our models on real-world tasks | OpenAI

OpenAI’s new benchmark, GDPval, tests models on actual work outputs such as slides, spreadsheets, and documents across 44 professions. On their “gold set” of 220 tasks, Claude Opus 4.1 stood out on design and formatting, while GPT-5 was strongest on accuracy. Performance has more than doubled since GPT-4o in 2024, and models now deliver results about 100× faster and cheaper than human experts (in raw inference). It is a strong signal of progress, but real-world adoption still requires oversight and iteration.

- How people are using ChatGPT | OpenAI

OpenAI looked at 1.5M chats and found usage has gone mainstream. Women now make up more than half of users (up from 37% in early 2024), and use cases split roughly 30% work and 70% personal. Most behaviour clusters into Asking (fact-finding), Doing (help with tasks), and Expressing (creative or reflective writing). Worth asking yourself: are you running this kind of analysis on your own product usage data, and are your prompt and evaluation sets keeping up with how customers actually query your product?

- VaultGemma: The world’s most capable differentially private LLM

Google released VaultGemma, a 1B-parameter model trained with differential privacy. That means it adds carefully calibrated “noise” during training so that no single training sequence has a meaningful influence on the model, which makes it very hard to reverse-engineer any individual example from it. Performance still lags non-private models, but the gap is closing. For anyone building in highly regulated settings, it’s a useful baseline that shows private training at scale is now realistic.
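The standard technique for this kind of training is DP-SGD. Each example's gradient is clipped to a fixed norm, so no single example can move the model much, and Gaussian noise scaled to that bound is added before the update. Below is a toy, pure-Python sketch of one update step. The parameter values are arbitrary, and it leaves out the privacy accounting (tracking ε and δ across steps) and the batch sampling a real system needs. It is not VaultGemma's training code.

```python
import math
import random
from typing import List


def clip(grad: List[float], max_norm: float) -> List[float]:
    """Scale a gradient down so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [g * scale for g in grad]


def dp_sgd_step(params: List[float], per_example_grads: List[List[float]], lr: float,
                max_norm: float, noise_multiplier: float, rng: random.Random) -> List[float]:
    """One DP-SGD-style update: clip each example, sum, add Gaussian noise, average."""
    clipped = [clip(g, max_norm) for g in per_example_grads]  # bounds each example's influence
    summed = [sum(col) for col in zip(*clipped)]
    sigma = noise_multiplier * max_norm  # noise scaled to the clipping bound
    noisy = [s + rng.gauss(0.0, sigma) for s in summed]
    batch_size = len(per_example_grads)
    return [p - lr * n / batch_size for p, n in zip(params, noisy)]


if __name__ == "__main__":
    rng = random.Random(0)
    grads = [[30.0, 40.0], [0.3, 0.4]]  # the first example is an outlier
    print(dp_sgd_step([0.0, 0.0], grads, lr=0.1, max_norm=1.0, noise_multiplier=1.1, rng=rng))
```

The noise is what costs accuracy, which is why private models still trail non-private ones: a larger `noise_multiplier` gives a stronger guarantee but noisier updates.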